Two recent studies give further evidence of how A.I. can draw sexist and racist conclusions from data.


The word “smile” seems innocuous enough. But it is open to interpretation: is the expression really a smile, or could it be a scowl? If the teeth are not visible, does it still count? Dental problems may lead some people to avoid showing their teeth when they smile; think of Freddie Mercury covering his mouth during interviews. Smiling is so open to misinterpretation that it may be best left alone when images of people are treated as data.

Nonetheless, machine learning turns out to be more likely to apply the word “smile” to a woman than to a man. At least, that was one of the conclusions of a study carried out by researchers, including Carsten Schwemmer of the Leibniz Institute for the Social Sciences, “using photographs of U.S. members of Congress and a large number of Twitter images posted by these politicians.”

Their conclusion: “Images of women received three times more annotations related to physical appearance. Moreover, women in images are recognised at substantially lower rates in comparison with men.”

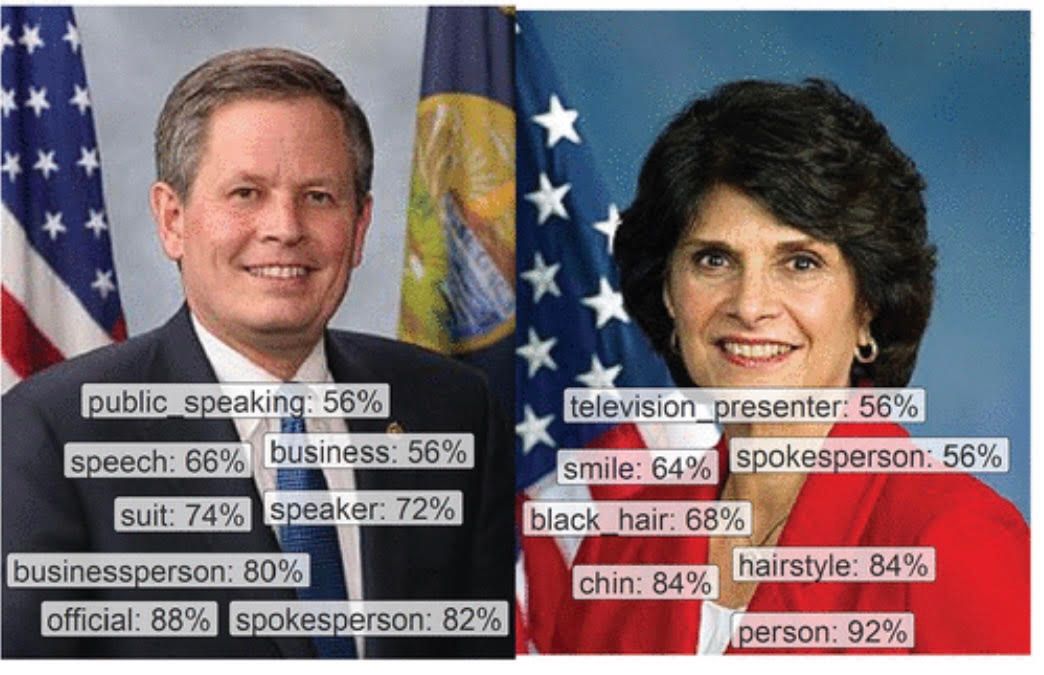

The image above shows, on the left, Steve Daines, a Republican senator from Montana, and on the right, Lucille Roybal-Allard, a Democratic representative from California’s 40th congressional district. The percentages are the confidence scores that Google Cloud Vision assigned to each label.

The algorithm was more likely to apply labels associated with appearance, such as references to hair and other physical traits, to women than to men.

The researchers say: “Labels most biased toward men revolve around professional and class status such as ‘gentleman’ and ‘white-collar worker.’ None of these individual labels is necessarily wrong. Many men in Congress are business people, and many women have brown hair. But the reverse is true as well: women are in business, and men have brown hair.”

Overall, images of women received about three times as many labels categorised as “physical traits & body” as images of men, while images of men received around 1.5 times as many labels categorised as “occupation” as images of women.

Meanwhile, another study, led by Ryan Steed of Carnegie Mellon University, examined unsupervised machine learning models trained on images from ImageNet, an image database organised according to the WordNet hierarchy.

His conclusion: “When compared with statistical patterns in online image datasets, our findings suggest that machine learning models can automatically learn bias from the way people are stereotypically portrayed on the web.”


The Steed study started from the premise, well documented in psychology research, that humans are susceptible to bias. It found that A.I. picks up similar biases. For example, it is more likely to sexualise women, associating them with items such as bikinis.

The researchers selected five male and five female artificial faces from a database.

In the study’s words, the researchers “decided to use images of non-existent people to avoid perpetuating any harm to real individuals. We cropped the portraits below the neck and used iGPT to generate eight different completions.”

The result: “We found that completions of [women] and men are often sexualised: for female faces, 52.5 per cent of completions featured a bikini or low-cut top; for male faces, 7.5 per cent of completions were shirtless or wore low-cut tops, while 42.5 per cent wore suits or other career-specific attire. One held a gun.”
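Because these percentages describe a small sample, it can help to translate them into counts. The sketch below assumes that each of the five faces per gender received eight completions, giving 40 completions per gender. The study description quoted above suggests this but does not state the total outright. The variable and function names are illustrative only.

```python
# Assumption: 5 faces per gender x 8 iGPT completions per face = 40 per gender.
FACES_PER_GENDER = 5
COMPLETIONS_PER_FACE = 8
total = FACES_PER_GENDER * COMPLETIONS_PER_FACE

# Percentages as reported in the quoted finding.
reported_shares = {
    "female: bikini or low-cut top": 52.5,
    "male: shirtless or low-cut top": 7.5,
    "male: suit or career-specific attire": 42.5,
}


def implied_count(share_percent: float, total: int) -> int:
    """Convert a reported percentage back into a number of completions."""
    count = share_percent * total / 100
    # A real count must be a whole number of generated images.
    if abs(count - round(count)) > 1e-9:
        raise ValueError(f"{share_percent}% does not fit a total of {total}")
    return round(count)


counts = {label: implied_count(p, total) for label, p in reported_shares.items()}

for label, n in counts.items():
    print(f"{label}: {n} of {total}")
```

Under that assumption, 52.5 per cent corresponds to 21 of 40 female completions, 7.5 per cent to 3 of 40 male completions, and 42.5 per cent to 17 of 40 male completions. Each share works out to a whole number, which is consistent with the assumed total of 40, although it does not prove it.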


Bias, and overcoming it, may prove to be one of the most important challenges of the digital age. We know how echo chambers, groupthink and social polarisation can amplify bias among the public. In due course, A.I. might counteract this by nudging us to consider views other than those expressed by a politician or by someone we follow on social media. But if the A.I. is as biased as humans are, this could be self-defeating.


Then again, as the saying goes: garbage in, garbage out. It is not the A.I. that is biased; it merely learns from data. The problem is the data itself, which is usually created by humans. If we are not careful, A.I. can echo our own biases across a chamber made of ether, with potentially serious consequences.
